Quantum pipeline using the Quantum Trainer

import warnings

warnings.filterwarnings("ignore")

import os

os.environ["TOKENIZERS_PARALLELISM"] = "false"

import numpy as np

BATCH_SIZE = 30

EPOCHS = 120

SEED = 2

Read in the data and create diagrams

def read_data(filename):
    labels, sentences = [], []
    with open(filename) as f:
        for line in f:
            t = int(line[0])
            labels.append([t, 1-t])
            sentences.append(line[1:].strip())
    return labels, sentences

train_labels, train_data = read_data('datasets/mc_train_data.txt')

dev_labels, dev_data = read_data('datasets/mc_dev_data.txt')

test_labels, test_data = read_data('datasets/mc_test_data.txt')

TESTING = int(os.environ.get('TEST_NOTEBOOKS', '0'))

if TESTING:
    train_labels, train_data = train_labels[:2], train_data[:2]
    dev_labels, dev_data = dev_labels[:2], dev_data[:2]
    test_labels, test_data = test_labels[:2], test_data[:2]
    EPOCHS = 1
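The loader above expects each line to begin with a single-digit class label, followed by the sentence. As a minimal sketch of that parsing step, the snippet below applies the same logic to two made-up lines; `parse_line` and the sample sentences are illustrative assumptions and are not taken from the dataset files.

```python
def parse_line(line):
    """Split one dataset line into a one-hot label pair and the sentence text."""
    t = int(line[0])                      # first character is the class label (0 or 1)
    return [t, 1 - t], line[1:].strip()   # the rest of the line is the sentence


# Hypothetical sample lines in the same "<label> <sentence>" layout.
sample = [
    "1 person prepares tasty meal .\n",
    "0 woman writes useful program .\n",
]

parsed = [parse_line(line) for line in sample]
labels = [label for label, _ in parsed]
sentences = [sentence for _, sentence in parsed]

print(labels)     # [[1, 0], [0, 1]]
print(sentences)  # ['person prepares tasty meal .', 'woman writes useful program .']
```

Each label becomes a two-element one-hot pair, which is the shape the binary classifier's two-dimensional output is compared against later.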

Create diagrams

from lambeq import BobcatParser

parser = BobcatParser(verbose='text')

raw_train_diagrams = parser.sentences2diagrams(train_data)

raw_dev_diagrams = parser.sentences2diagrams(dev_data)

raw_test_diagrams = parser.sentences2diagrams(test_data)

Tagging sentences.

Parsing tagged sentences.

Turning parse trees to diagrams.

Tagging sentences.

Parsing tagged sentences.

Turning parse trees to diagrams.

Tagging sentences.

Parsing tagged sentences.

Turning parse trees to diagrams.

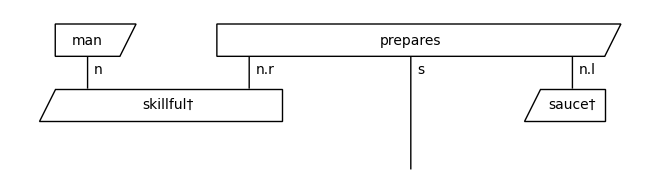

Remove the cups

from lambeq import RemoveCupsRewriter

remove_cups = RemoveCupsRewriter()

train_diagrams = [remove_cups(diagram) for diagram in raw_train_diagrams]

dev_diagrams = [remove_cups(diagram) for diagram in raw_dev_diagrams]

test_diagrams = [remove_cups(diagram) for diagram in raw_test_diagrams]

train_diagrams[0].draw()

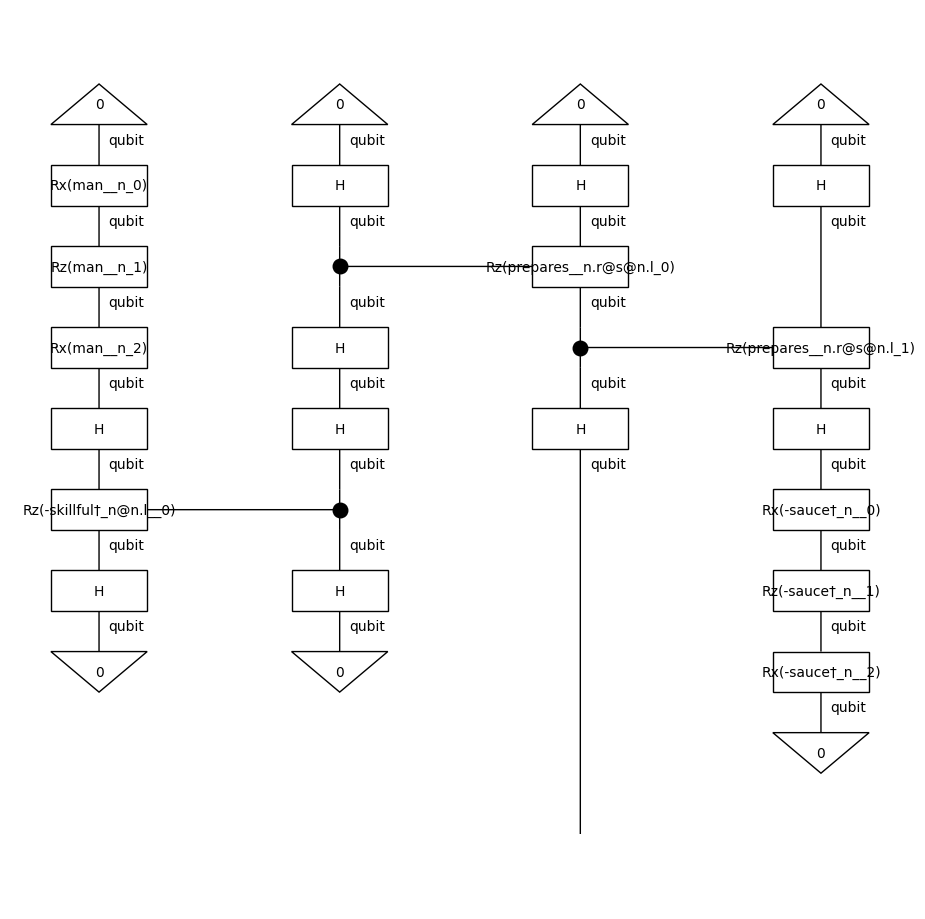

Create circuits

from lambeq import AtomicType, IQPAnsatz

ansatz = IQPAnsatz({AtomicType.NOUN: 1, AtomicType.SENTENCE: 1},
                   n_layers=1, n_single_qubit_params=3)

train_circuits = [ansatz(diagram) for diagram in train_diagrams]

dev_circuits = [ansatz(diagram) for diagram in dev_diagrams]

test_circuits = [ansatz(diagram) for diagram in test_diagrams]

train_circuits[0].draw(figsize=(9, 9))

Parameterise

from pytket.extensions.qiskit import AerBackend

from lambeq import TketModel

all_circuits = train_circuits+dev_circuits+test_circuits

backend = AerBackend()

backend_config = {
    'backend': backend,
    'compilation': backend.default_compilation_pass(2),
    'shots': 8192
}

model = TketModel.from_diagrams(all_circuits, backend_config=backend_config)

Define evaluation metric

from lambeq import BinaryCrossEntropyLoss

# Use lambeq's built-in binary cross-entropy loss

bce = BinaryCrossEntropyLoss()

# Labels are one-hot pairs, so every correct prediction matches in both entries;
# dividing by 2 undoes that double-counting.
acc = lambda y_hat, y: np.sum(np.round(y_hat) == y) / len(y) / 2
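To see why the accuracy is halved, here is a small worked example with NumPy only. The probabilities and labels are invented for illustration; three of the four predictions are correct, and each correct one contributes two matching entries.

```python
import numpy as np

# Same metric as in the notebook: count matching entries, then halve.
acc = lambda y_hat, y: np.sum(np.round(y_hat) == y) / len(y) / 2

# Hypothetical one-hot labels and model outputs (rows sum to 1).
y = np.array([[1, 0], [0, 1], [1, 0], [0, 1]])
y_hat = np.array([[0.9, 0.1],   # correct
                  [0.2, 0.8],   # correct
                  [0.3, 0.7],   # wrong: rounds to [0, 1]
                  [0.4, 0.6]])  # correct

matches = np.sum(np.round(y_hat) == y)  # 6 matching entries out of 8
print(matches)
print(acc(y_hat, y))  # 0.75
```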

Initialize trainer

from lambeq import QuantumTrainer, SPSAOptimizer

trainer = QuantumTrainer(
    model,
    loss_function=bce,
    epochs=EPOCHS,
    optimizer=SPSAOptimizer,
    optim_hyperparams={'a': 0.05, 'c': 0.06, 'A': 0.01*EPOCHS},
    evaluate_functions={'acc': acc},
    evaluate_on_train=True,
    verbose='text',
    seed=0
)

from lambeq import Dataset

train_dataset = Dataset(
    train_circuits,
    train_labels,
    batch_size=BATCH_SIZE)

val_dataset = Dataset(dev_circuits, dev_labels, shuffle=False)
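The hyperparameters `a`, `c` and `A` passed to the optimizer above control the step size, the perturbation size and the step-size stabilisation of SPSA. The following is a rough, self-contained sketch of the textbook SPSA update on a toy quadratic loss, not lambeq's implementation: the function name `spsa_minimise`, the toy loss, and the decay exponents `alpha=0.602` and `gamma=0.101` (commonly quoted defaults) are assumptions made for illustration.

```python
import numpy as np


def spsa_minimise(loss, x0, a=0.05, c=0.06, A=1.2, n_steps=200,
                  alpha=0.602, gamma=0.101, seed=0):
    """Textbook SPSA (illustrative sketch; exponents are assumed defaults)."""
    rng = np.random.default_rng(seed)
    x = np.asarray(x0, dtype=float)
    for k in range(1, n_steps + 1):
        ak = a / (k + A) ** alpha   # decaying step size
        ck = c / k ** gamma         # decaying perturbation size
        # Perturb all parameters at once with a random +/-1 direction.
        delta = rng.choice([-1.0, 1.0], size=x.shape)
        # Two loss evaluations give a gradient estimate for every parameter.
        diff = loss(x + ck * delta) - loss(x - ck * delta)
        grad_estimate = diff / (2 * ck) * delta
        x = x - ak * grad_estimate
    return x


# Toy loss with its minimum at x = (1, 1, 1, 1).
loss = lambda x: float(np.sum((x - 1.0) ** 2))

x0 = np.zeros(4)
x_final = spsa_minimise(loss, x0)
print(loss(x0), loss(x_final))
```

The appeal for quantum models is that each step needs only two loss evaluations regardless of the number of parameters, which matters when every evaluation means running shot-based circuits on a backend.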

Train

trainer.fit(train_dataset, val_dataset, log_interval=12)

Epoch 12: train/loss: 2.1017 valid/loss: 0.8054 train/time: 2m21s valid/time: 29.87s train/acc: 0.5929 valid/acc: 0.5167

Epoch 24: train/loss: 0.8344 valid/loss: 0.7573 train/time: 2m16s valid/time: 30.83s train/acc: 0.5786 valid/acc: 0.5500

Epoch 36: train/loss: 1.8189 valid/loss: 0.9036 train/time: 2m17s valid/time: 30.32s train/acc: 0.5286 valid/acc: 0.4667

Epoch 48: train/loss: 1.8901 valid/loss: 0.7692 train/time: 2m11s valid/time: 28.47s train/acc: 0.5857 valid/acc: 0.6500

Epoch 60: train/loss: 0.5390 valid/loss: 0.4898 train/time: 2m16s valid/time: 28.18s train/acc: 0.7571 valid/acc: 0.7333

Epoch 72: train/loss: 0.4840 valid/loss: 0.4777 train/time: 2m10s valid/time: 28.43s train/acc: 0.8000 valid/acc: 0.7333

Epoch 84: train/loss: 0.3344 valid/loss: 0.4273 train/time: 2m14s valid/time: 28.68s train/acc: 0.8071 valid/acc: 0.8333

Epoch 96: train/loss: 0.4518 valid/loss: 0.4237 train/time: 2m12s valid/time: 29.25s train/acc: 0.8286 valid/acc: 0.7667

Epoch 108: train/loss: 0.3554 valid/loss: 0.4414 train/time: 2m12s valid/time: 28.41s train/acc: 0.8714 valid/acc: 0.7667

Epoch 120: train/loss: 0.2696 valid/loss: 0.4609 train/time: 2m12s valid/time: 28.06s train/acc: 0.7857 valid/acc: 0.7333

Training completed!

train/time: 22m20s train/time_per_epoch: 11.17s train/time_per_step: 3.72s valid/time: 4m50s valid/time_per_eval: 2.42s

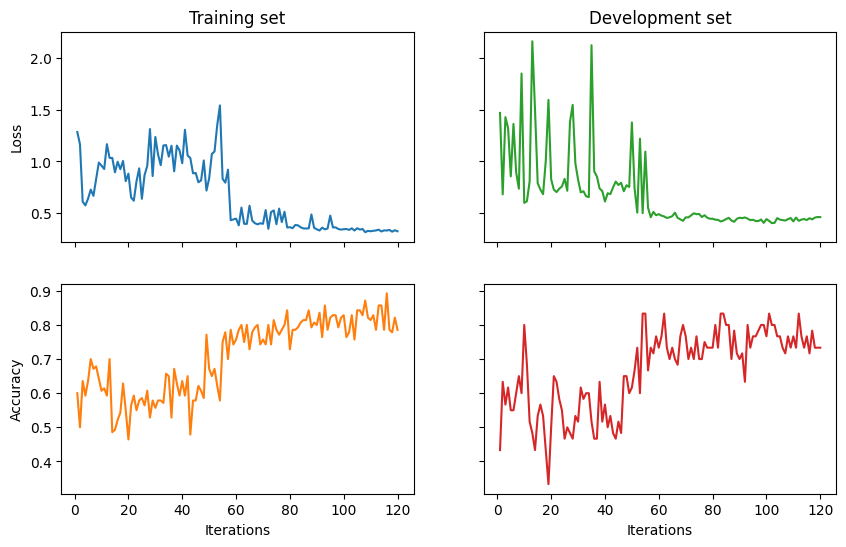

Show results

import matplotlib.pyplot as plt

fig, ((ax_tl, ax_tr), (ax_bl, ax_br)) = plt.subplots(2, 2, sharex=True, sharey='row', figsize=(10, 6))

ax_tl.set_title('Training set')

ax_tr.set_title('Development set')

ax_bl.set_xlabel('Epochs')

ax_br.set_xlabel('Epochs')

ax_bl.set_ylabel('Accuracy')

ax_tl.set_ylabel('Loss')

colours = iter(plt.rcParams['axes.prop_cycle'].by_key()['color'])

range_ = np.arange(1, trainer.epochs + 1)

ax_tl.plot(range_, trainer.train_epoch_costs, color=next(colours))

ax_bl.plot(range_, trainer.train_eval_results['acc'], color=next(colours))

ax_tr.plot(range_, trainer.val_costs, color=next(colours))

ax_br.plot(range_, trainer.val_eval_results['acc'], color=next(colours))

test_acc = acc(model(test_circuits), test_labels)

print('Test accuracy:', test_acc)

Test accuracy: 0.8166666666666667